AI in Cyber Security: Why Hackers Are Winning the Arms Race — and What Defenders Must Do

In the evolving battlefield of cybersecurity, Artificial Intelligence (AI) has firmly entered the fray. But unlike previous technological shifts, this one is not delivering equal advantage to both sides. AI is proving vastly more transformative for attackers than for defenders.

While defensive tools benefit from smarter detection and triage, attackers are now using AI not just to find vulnerabilities, but to actively exploit them at scale, with speed, and increasingly without human oversight.

This is the heart of the current cyber revolution.

Back in April at CyberUK, I heard a panellist say something that really stuck: ‘You can’t AI your way out of an AI-enabled attack.’ That phrase has echoed through nearly every briefing since.

Exploits on Autopilot: How AI Took the Wheel

Once upon a time, exploitation was the exclusive domain of skilled human hackers. Now, it's increasingly an automated process: one where AI doesn’t just assist, it executes. The technical heavy lifting is being handed off to machines that don’t need lunch breaks or sleep cycles.

Cybercriminals have long used automation to discover exposed systems, using tools like Shodan or mass-scan frameworks. But exploitation — the part where attackers interpret the system's response and adjust their attack — used to require a human.

And here’s the twist: AI has changed that. It can:

- Read vulnerability feeds in real time

- Launch exploits against specific systems

- Use feedback (telemetry) from the target to adapt the exploit dynamically

- Confirm success and escalate access — all without human input

This turns AI hacking into more than automated scanning: it becomes intelligent, adaptive breach automation. By the time human operators step in, the door is already open.

The Rise of the Hands-Free Hack

It’s not just that AI can exploit systems; it can orchestrate entire campaigns. From reconnaissance to payload delivery, the kill chain is becoming a hands-free operation. And in this new paradigm, the humans arrive after the breach.

The term “AI hackers” is no longer theoretical. We’re now seeing structured campaigns where:

- AI tools monitor for fresh CVEs

- Exploits are crafted and launched autonomously

- Systems that respond a certain way are flagged for human follow-up

- Initial access is secured by machines, not humans

This is the real reason AI and cyber security are trending together, and why the concern isn’t just academic. It’s not just that AI makes hacking faster. It makes it hands-free.

Blue Team’s AI: Helpful, but Not Heroic

Yes, defenders have AI. But it's more co-pilot than pilot — augmenting, not replacing. While red teams are automating offence, the blue team is still stuck refining alerts and tuning dashboards. The gap isn’t in tools — it’s in leverage.

There are genuine applications of AI security tooling in defensive security:

- Fingerprinting and behavioural baselining

- Enhancing signal-to-noise in security logs

- Automating triage for overworked security teams

- Recognising early indicators of compromise
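
To make behavioural baselining concrete, here is a minimal sketch in Python. It assumes a very simple model (a z-score against a history of daily login counts); the function name, the threshold, and the sample numbers are all hypothetical and only there for illustration. Real products use far richer features, but the principle is the same: learn what normal looks like, then flag what deviates from it.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observed, threshold=3.0):
    """Return (label, value, z_score) for observations far from the baseline.

    baseline: historical values that represent normal behaviour.
    observed: dict mapping a label (e.g. a day) to a new value.
    threshold: how many standard deviations count as anomalous (assumed).
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    flagged = []
    for label, value in observed.items():
        if sigma == 0:
            # A perfectly flat baseline: any change at all is a deviation.
            z = 0.0 if value == mu else float("inf")
        else:
            z = (value - mu) / sigma
        if abs(z) >= threshold:
            flagged.append((label, value, round(z, 2)))
    return flagged


# Hypothetical daily login counts for a single account.
baseline_logins = [12, 15, 11, 14, 13, 12, 16, 14]
this_week = {"mon": 13, "tue": 15, "wed": 90}

for label, value, z in flag_anomalies(baseline_logins, this_week):
    print(f"{label}: {value} logins looks unusual (z-score {z})")
```

With this sample data, only the Wednesday spike is flagged. Note what the sketch does and does not do: it cuts noise and points a human at the odd event, which is exactly the augmenting role described above.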

Still, let’s be honest: these tools tend to be augmentative, not transformational. In AI cyber security, the defensive side is still heavily reliant on configuration, patching, and judgement. It’s still fundamentally human.

Testing the Testers: When AI Is Both Weapon and Target

AI is creating new attack surfaces, and new ways to probe them. Just as defenders turn their sights on model vulnerabilities, adversaries are arming AI systems to carry out the probing themselves. It’s red team vs red team, and one of them doesn’t blink.

Pentesting AI systems (such as language models, computer vision tools, or data classifiers) is becoming a discipline in its own right. Increasingly, this form of AI penetration testing is being recognised as a necessary part of modern red teaming. Red teams are beginning to treat AI models like any other software surface: vulnerable, targetable, and exploitable.

Whether it’s prompt injection, data poisoning, or hijacking of outputs, the AI stack is no longer immune to offensive testing, and AI pentest teams are growing as a result.

When the Chatbot Joins the Attack Chain

What started as harmless prompt-engineering party tricks has grown up fast. Today, large language models like ChatGPT are being folded into phishing kits, social engineering playbooks, and even malware scaffolding. The chatbot’s been weaponised, and it now speaks fluent exploitation.

Attackers are using ChatGPT-style models to:

- Write evasive phishing emails

- Automate script generation

- Manipulate dialogue-based authentication

- Assist in pretexting attacks with real-time responses

Who’s Watching the Model?

AI doesn’t just pose risks from the outside; it creates new risks from within. Models can be manipulated, poisoned, or subverted, and most organisations aren’t even watching. When the system making decisions becomes the vulnerability, the security conversation shifts entirely.

Cybersecurity for AI isn't just about protecting the infrastructure. It’s about ensuring that models behave as intended under pressure, manipulation, or malicious input. It also raises broader concerns around artificial intelligence data security, especially when sensitive systems rely on flawed or exposed models.

AI Can’t Save You from Slack Patch Discipline

Here’s the uncomfortable truth: most breaches still come down to unpatched systems and sloppy configurations. No amount of AI wizardry can cover for missing updates and flat networks. The fundamentals haven’t changed; they’ve just become less optional.

"Eat your greens" is the advice from GCHQ

It might sound like the same old advice — because it is. But that doesn’t make it wrong. The advice may be deceptively simple, but it remains unshakably true:

- Patch consistently

- Lock down exposed services

- Monitor for misconfiguration

- Reduce identity sprawl

- Benchmark your systems continuously
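
As a rough illustration of how a couple of these checks can be automated, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `Host` record, the 30-day patch window, the allow-list of ports, and the sample hosts. It is not a description of any particular product, just the shape of the idea.

```python
from dataclasses import dataclass, field
from datetime import date

# Assumed policy values, chosen only for this example.
MAX_PATCH_AGE_DAYS = 30
ALLOWED_PORTS = {443}


@dataclass
class Host:
    name: str
    last_patched: date
    open_ports: set = field(default_factory=set)


def hygiene_findings(hosts, today):
    """Return (host name, finding) pairs for stale patches and unexpected ports."""
    findings = []
    for host in hosts:
        age = (today - host.last_patched).days
        if age > MAX_PATCH_AGE_DAYS:
            findings.append((host.name, f"patches are {age} days old"))
        unexpected = sorted(host.open_ports - ALLOWED_PORTS)
        if unexpected:
            findings.append((host.name, f"unexpected exposed ports: {unexpected}"))
    return findings


# Hypothetical inventory.
inventory = [
    Host("web-01", date(2025, 1, 2), {443}),
    Host("db-01", date(2024, 10, 1), {443, 3389}),
]

for name, finding in hygiene_findings(inventory, today=date(2025, 1, 15)):
    print(f"{name}: {finding}")
```

Run on a schedule against a real inventory, a check like this turns "patch consistently" and "lock down exposed services" from good intentions into something measurable.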

Automation, the Old-Fashioned Way

While attackers dream up novel AI vectors, smart defenders are returning to basics — but automating them with intent. Visibility, hygiene, patch compliance: it’s not glamorous, but it’s scalable. And right now, that’s what matters most.
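
One example of automating the basics with intent is checking new advisories against your own inventory as soon as they land, rather than waiting for a quarterly review. The Python sketch below assumes a simplified advisory format with a `fixed_in` version and a plain dotted version scheme; the product name, hosts, and advisory ID are made up for illustration, and real feeds and version strings are messier.

```python
def parse_version(text):
    """Turn a dotted version such as '2.4.10' into a comparable tuple (2, 4, 10)."""
    return tuple(int(part) for part in text.split("."))


def affected_assets(advisories, inventory):
    """Return (host, advisory id) pairs where the installed version predates the fix."""
    hits = []
    for advisory in advisories:
        fixed = parse_version(advisory["fixed_in"])
        for asset in inventory:
            if asset["product"] != advisory["product"]:
                continue
            if parse_version(asset["version"]) < fixed:
                hits.append((asset["host"], advisory["id"]))
    return hits


# Hypothetical advisory and asset data.
advisories = [
    {"id": "EXAMPLE-0001", "product": "examplehttpd", "fixed_in": "2.4.10"},
]
assets = [
    {"host": "web-01", "product": "examplehttpd", "version": "2.4.9"},
    {"host": "web-02", "product": "examplehttpd", "version": "2.4.12"},
]

for host, advisory_id in affected_assets(advisories, assets):
    print(f"{host} needs patching for {advisory_id}")
```

Versions are compared as tuples of integers rather than as strings, because a plain string comparison would wrongly treat "2.4.9" as newer than "2.4.10". Small details like that are where hygiene automation tends to succeed or fail.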

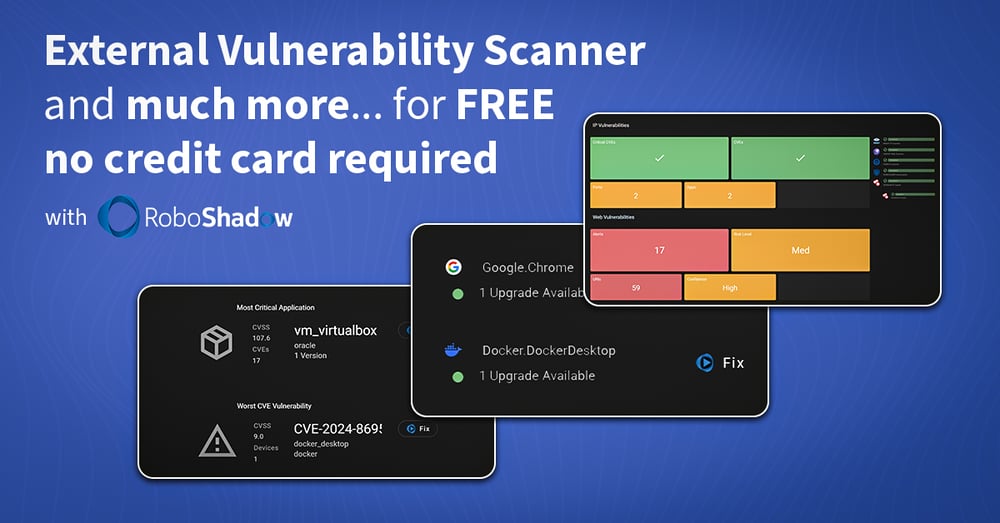

At RoboShadow, we’re focused on automating the stuff that actually keeps you safe. Our platform gives full visibility across:

- Your external attack surface

- Internal misconfigurations and vulnerabilities

- Microsoft 365 posture and compliance benchmarks

- Patch and update tracking

- End-to-end asset visibility

This Isn’t a Smarter Arms Race — It’s a Cleaner One

Forget outsmarting attackers with neural nets. The real victory comes from closing the gaps they count on: misconfigurations, exposed services, and slow response times. The race isn’t to be the most advanced. It’s to be the least hackable.

The most dangerous myth in AI cyber security is that defenders need to “out-AI” the attackers.

You don’t. You need speed, consistency, and coverage — especially at the hygiene level. That’s where AI offers the most real-world value to defenders: by helping us do the basics better, faster, and with fewer people.

Let attackers build smarter tools. Defenders win by removing the easy paths in the first place.

If you have any questions or want to talk about this further, please don't hesitate to reach out to me directly: terry@roboshadow.com

For suggested further reading, we recommend this report produced by the NCSC: Impact of AI on Cyber Threat from now until 2027

I’m lucky to have worked in technology all over the world for large multinational organisations. In recent years I have built technology brands and developed products to help make technology that bit easier for people to grasp and manage. By day I run tech businesses; by night (as soon as the kids have gone to bed) I write code, and I love building Cyber Security technology.